Advanced indexing settings

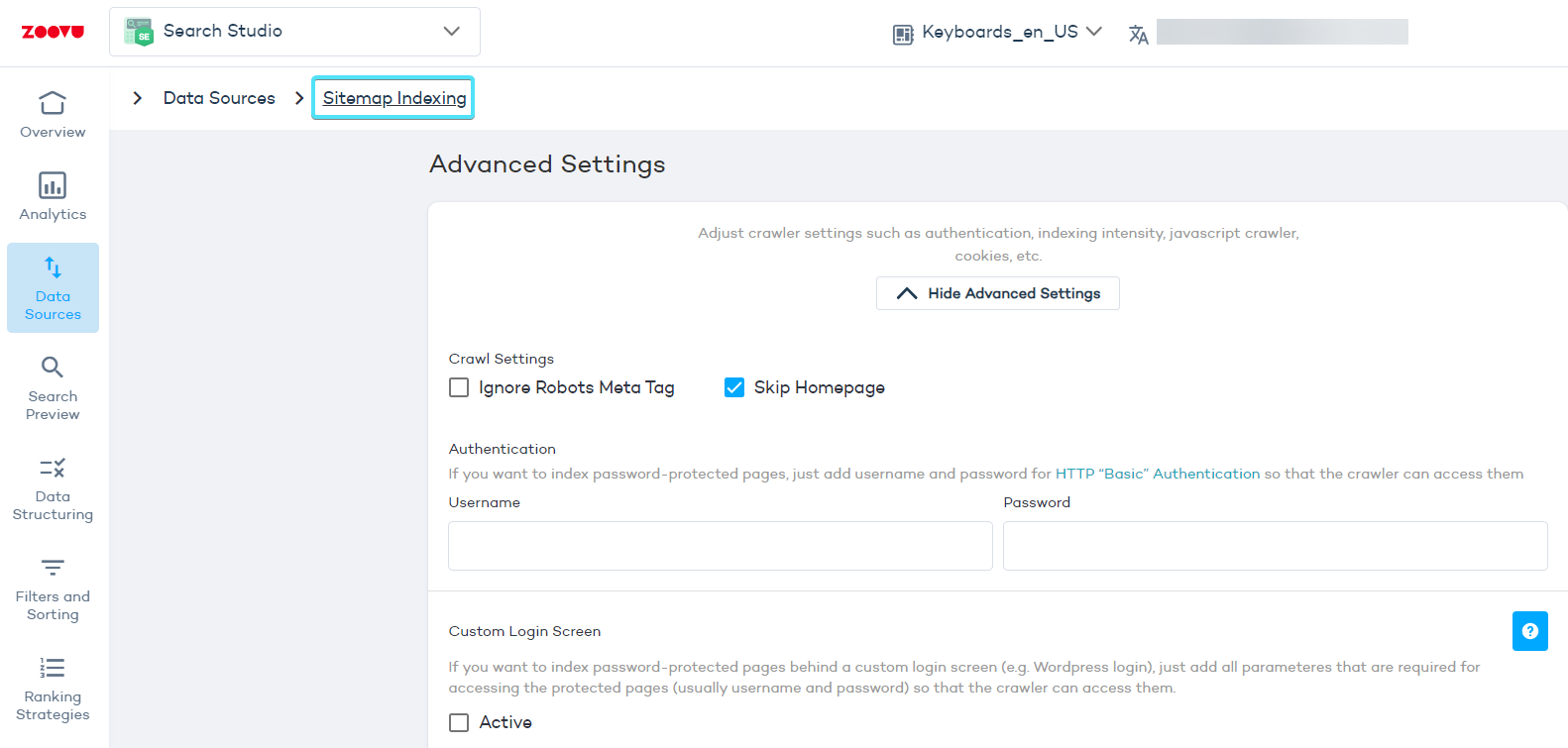

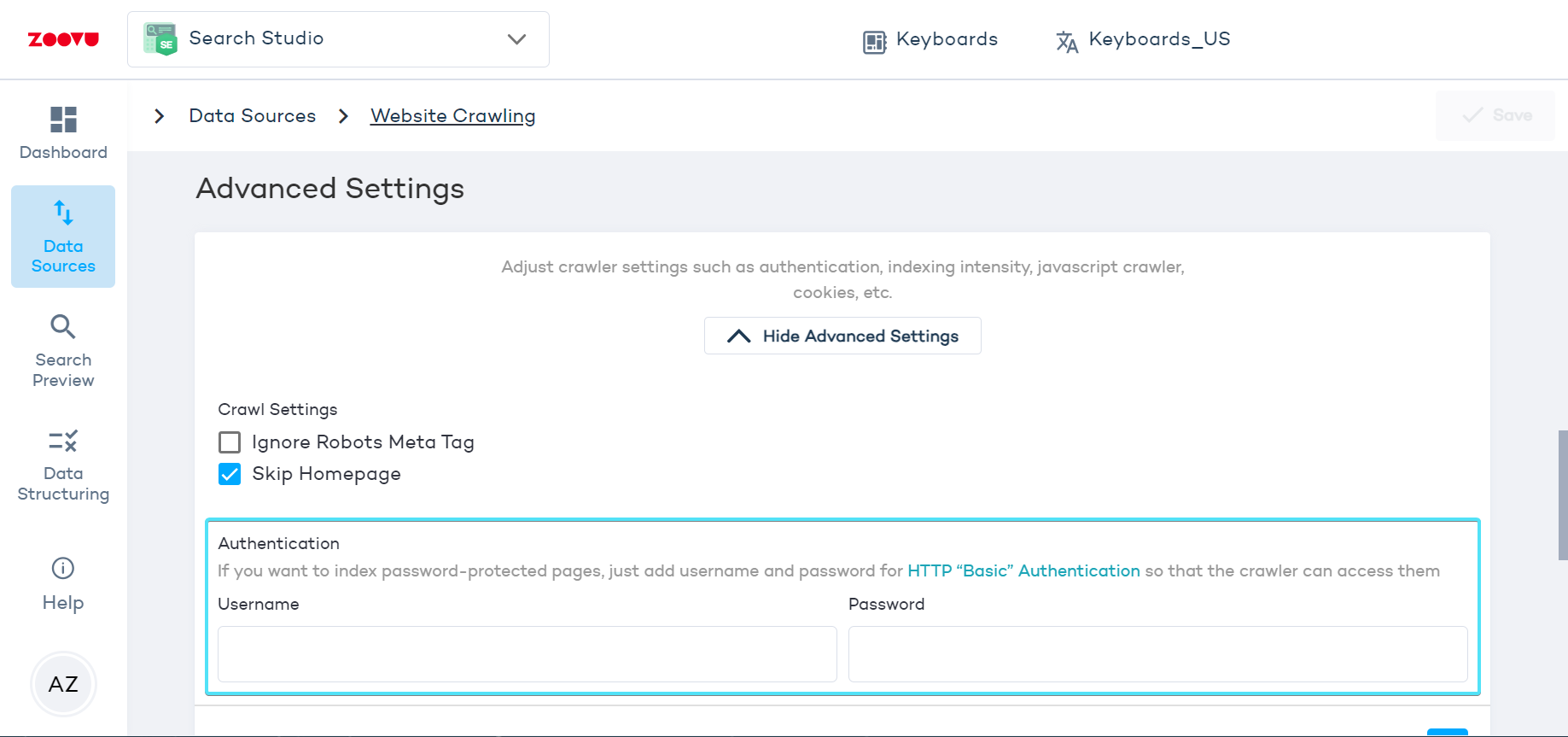

To access advanced indexing settings, go to Data Sources > Website Crawling > Advanced Settings or Data Sources > Sitemap Indexing > Advanced Settings, then press "Show advanced settings".

Ignore Robots Meta Tag

The robots meta tag lets you control how an individual page should be indexed in Google Search results.

- If you want to keep your site pages hidden from Google but allow Zoovu to index them, check the Ignore Robots Meta Tag box.

- If you want to keep the pages visible in Google but remove them from your on-site search results, use blacklisting or no-indexing rules. Alternatively, you can add a meta tag to the unwanted pages:

<meta name="zoovu-indexer" content="noindex" />
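To confirm that a page actually carries the tag before re-indexing, a quick local check can help. The following is a minimal sketch using only Python's standard library; the function name `has_zoovu_noindex` is hypothetical, and treating the `content` value as a comma-separated list of directives is an assumption borrowed from how robots meta tags are usually written.

```python
from html.parser import HTMLParser


class IndexerMetaParser(HTMLParser):
    """Collects directives from <meta name="zoovu-indexer"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # Self-closing tags (<meta ... />) are routed here as well.
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "zoovu-indexer":
            content = attr.get("content") or ""
            # Assumption: directives may be comma-separated, as in robots meta tags.
            self.directives.extend(part.strip().lower() for part in content.split(","))


def has_zoovu_noindex(html: str) -> bool:
    """Return True if the page asks the Zoovu indexer not to index it."""
    parser = IndexerMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives
```

Note that a generic `robots` meta tag is deliberately not matched here, since the tag above targets only the on-site search index.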

Skip homepage

By default, the crawler skips your root URL(s). For example, if your root URL is domain.com, this page will not get indexed.

If you do want your homepage in your search results, uncheck Skip Homepage in the advanced settings.

Index secure or password-protected content

If you have password-protected content that you'd like to include in your search results, use one of these options:

- If you use HTTP Basic Authentication, fill out a username and password.

- If you have a custom login page, use the Custom Login Screen settings instead.

- Set a cookie to authenticate our crawler.

- Whitelist our crawler's IP addresses so it can access all pages without a login (under Firewall > Tools):

    - 88.99.218.202

    - 88.99.149.30

    - 88.99.162.232

    - 149.56.240.229

    - 51.79.176.191

    - 51.222.153.207

    - 139.99.121.235

    - 94.130.54.189

    - 116.202.85.24

- Provide a special sitemap.xml with deep links to the hidden content.

- Detect our crawler with the following User Agent string in the HTTP header:

    Mozilla/5.0 (compatible; Zoovu/1.0; +https://zoovu.com/)

- Push your content to our HTTP REST API.
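If you prefer to recognize the crawler in your own server code, the IP list and User Agent string above can be combined into one check. This is a minimal sketch, not an official integration: the function name `is_zoovu_crawler` is hypothetical, and matching on the `Zoovu/1.0` token (rather than the full string) is an assumption. Because a User Agent header can be spoofed by any client, the sketch requires the IP address to match as well.

```python
import ipaddress

# Crawler IP addresses as listed in this article.
CRAWLER_IPS = frozenset(
    ipaddress.ip_address(ip)
    for ip in (
        "88.99.218.202",
        "88.99.149.30",
        "88.99.162.232",
        "149.56.240.229",
        "51.79.176.191",
        "51.222.153.207",
        "139.99.121.235",
        "94.130.54.189",
        "116.202.85.24",
    )
)

# Assumption: this token from the documented User Agent string is stable.
CRAWLER_UA_TOKEN = "Zoovu/1.0"


def is_zoovu_crawler(remote_addr: str, user_agent: str) -> bool:
    """Return True only if both the source IP and the User Agent match."""
    try:
        addr = ipaddress.ip_address(remote_addr)
    except ValueError:
        return False  # Malformed address: never treat it as the crawler.
    return addr in CRAWLER_IPS and CRAWLER_UA_TOKEN in (user_agent or "")
```

A request handler could call `is_zoovu_crawler(client_ip, headers.get("User-Agent"))` and skip the login redirect when it returns `True`. If your site sits behind a proxy or CDN, make sure `client_ip` is the real client address and not the proxy's.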

Index content behind login pages

To index content behind a login page, follow these steps:

- Go to Advanced Settings > Custom Login Screen and check the box labeled "Active."

- Provide the URL of your login page (e.g., https://yoursite.com/login).

- Specify the login form XPath.

- On your login page, right-click the login form element, select Inspect, and find its id in the markup. For example:

<form name="loginform" id="loginform" action="https://yoursite.com/login.php" method="post">

- Use the id to create the XPath: //form[@id="loginform"]

- Define the authentication parameter names and map them to the credentials the crawler should use to access the content.

- Right-click the login field, select Inspect, and find the parameter name:

<input type="text" name="log" id="user_login" class="input">

- Use log as the Parameter Name. The login (username, email, etc.) would be the Parameter Value. Repeat for the password field.

- Save, then go to the Index section. There you can test your setup on a single URL and then re-index the entire site to add the password-protected pages to your search results.
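If you would rather not dig through the browser inspector, the form XPath and parameter names can also be pulled from the login page's HTML with a short script. This is a minimal sketch using Python's standard library; `inspect_login_form` is a hypothetical helper, it only looks at the first form that has an id, and the `pwd` field in the usage example is an invented name for illustration.

```python
from html.parser import HTMLParser


class LoginFormInspector(HTMLParser):
    """Records the first form id and the names of the inputs inside that form."""

    def __init__(self):
        super().__init__()
        self.form_id = None
        self.field_names = []
        self._in_form = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "form" and self.form_id is None and attr.get("id"):
            self.form_id = attr["id"]
            self._in_form = True
        elif tag == "input" and self._in_form and attr.get("name"):
            self.field_names.append(attr["name"])

    def handle_endtag(self, tag):
        if tag == "form":
            self._in_form = False


def inspect_login_form(html: str):
    """Return (form XPath or None, list of input names) for the login form."""
    inspector = LoginFormInspector()
    inspector.feed(html)
    inspector.close()
    xpath = f'//form[@id="{inspector.form_id}"]' if inspector.form_id else None
    return xpath, inspector.field_names
```

For example, with the markup shown in the steps above plus a hypothetical password field:

```python
page = """
<form name="loginform" id="loginform" action="https://yoursite.com/login.php" method="post">
  <input type="text" name="log" id="user_login" class="input">
  <input type="password" name="pwd" id="user_pass" class="input">
</form>
"""
xpath, names = inspect_login_form(page)
print(xpath)  # the value for the login form XPath field
print(names)  # candidate Parameter Names
```

Hidden inputs (such as CSRF tokens) will show up in the list too; whether they need to be mapped depends on your login page.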

Using cookies with the crawler

You can set a specific cookie for the crawler to use when accessing your website. For example, if you have a location cookie that determines the language of your search results, set this cookie to "us" for English or "de" for German.
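To preview what the crawler would see with a given cookie, you can send the same cookie yourself. The sketch below uses Python's standard library; the cookie name `location`, the URL, and the helper `build_request_with_cookie` are all assumptions for illustration, so substitute the cookie your site actually reads.

```python
import urllib.request


def build_request_with_cookie(url: str, name: str, value: str) -> urllib.request.Request:
    """Build a GET request that carries a single cookie."""
    request = urllib.request.Request(url)
    request.add_header("Cookie", f"{name}={value}")
    return request


# Usage (performs a real HTTP request, so it is left commented out):
# request = build_request_with_cookie("https://yoursite.com/", "location", "de")
# with urllib.request.urlopen(request) as response:
#     html = response.read().decode("utf-8", errors="replace")
```

If the returned HTML is in the expected language, the same cookie name and value should be a reasonable choice for the crawler setting.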

Adjust indexing intensity

Indexing intensity affects how quickly the crawler moves through your website. You can set the intensity from 1 (slowest, least server stress) to 5 (fastest, most server stress).

If you need faster crawling, consider using sitemap indexing and the Optimize Indexing setting.

Index JavaScript content

To enable JS crawling:

- Go to Website Crawling > Advanced Settings and activate the JS crawling toggle.

- Re-index your site.

JavaScript crawling takes more time and resources. If some information is missing from search results, make sure to activate the JavaScript Crawling feature in your package or push your JavaScript content to our API.
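One way to tell whether missing information is a JavaScript issue is to check if the text exists in the page's raw HTML, before any script runs. The following is a minimal diagnostic sketch, not a description of how the crawler works internally; `needs_js_crawling` is a hypothetical helper that ignores text inside script and style tags.

```python
from html.parser import HTMLParser


class StaticTextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def needs_js_crawling(raw_html: str, expected_phrase: str) -> bool:
    """Return True if the phrase is absent from the static (pre-JavaScript) text."""
    extractor = StaticTextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    # Collapse whitespace so line breaks in the markup do not hide a match.
    static_text = " ".join(" ".join(extractor.chunks).split())
    return expected_phrase.lower() not in static_text.lower()
```

Pass in the HTML as fetched without a browser (for example, via "View Source") and a phrase that is missing from your search results. If the function returns `True`, the phrase is likely rendered by JavaScript, and JS crawling or an API push is worth trying.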