Model Context Protocol (MCP) Server

By connecting your LLM to the Data Platform’s MCP server, the model gains secure access to your product data and can use the server’s tools to search for, retrieve, and compare products.

To start using our MCP server, please contact your Zoovu representative or send a message to the Customer Success Team.

Use cases

Our MCP server is designed for practical tasks such as:

- Resolving product search queries using AI, including:

  - applying the most relevant filters

  - applying the most relevant sorting options

  - returning the best-matching products

- Finding related products (e.g. compatible accessories, alternative options, and more)

- Retrieving detailed product information

- Comparing products and answering other product questions with the help of Zoe

Example queries for testing:

- "Is this material good for sensitive skin?"

- "Compare these two drills."

- "Recommend a laptop under $1,500 with a good graphics card."

Getting started

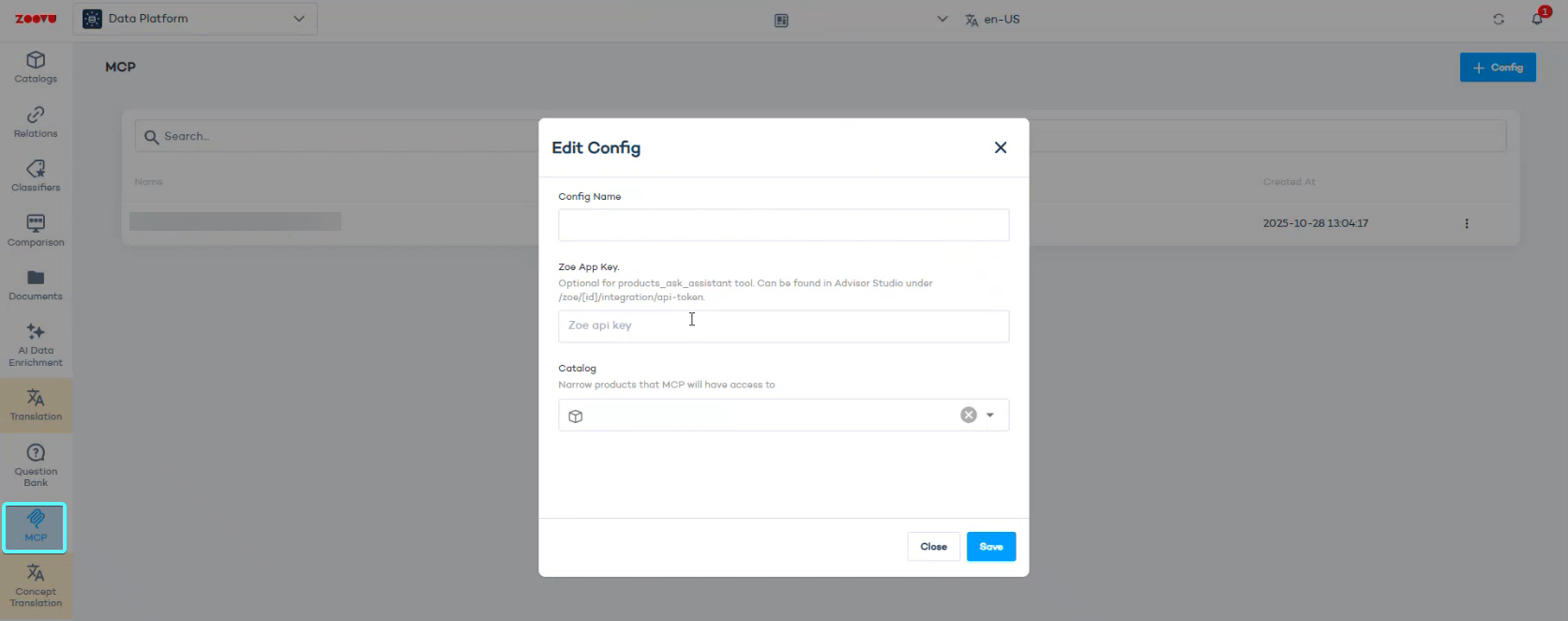

- Navigate to MCP in the sidebar.

- Create a new configuration.

- Provide a name for your configuration.

- Optional: If you want to enable the products_ask_assistant tool, include your Zoe App Key.

- Use the catalog dropdown to limit this configuration to a specific Data Platform catalog.

You can locate the Zoe App Key inside Advisor Studio, in the settings for the specific Zoe assistant, under the path: /zoe/[id]/integration/api-token. ([id] stands for your Zoe assistant's unique identifier.)

Copy the MCP server URL

- Once you save a configuration, open the three-dot menu to copy your unique MCP server URL.

- To authenticate, use your API key.

You can share this link with teammates to test the same setup.
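
As a rough illustration of what a client sends once it has the URL and key, the sketch below builds the JSON-RPC 2.0 tools/list request that the MCP specification defines for discovering a server's tools. The URL and key values are placeholders, and the Authorization: Bearer header is an assumption, since this page does not state how the API key is transmitted. Most MCP clients perform the initial handshake and this request for you, so treat it as a look under the hood rather than a required step.

```python
import json

# Hypothetical placeholders: use the URL copied from the three-dot menu
# and your own API key instead.
MCP_SERVER_URL = "https://mcp.example.invalid/your-configuration"
API_KEY = "YOUR_API_KEY"


def build_tools_list_request(request_id=1):
    """Build the JSON-RPC 2.0 message that asks an MCP server to list its tools."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


def build_headers(api_key):
    """Build HTTP headers for the request.

    ASSUMPTION: sending the key as a Bearer token is a guess; check how
    your client or the server expects the API key to be passed.
    """
    return {
        "Content-Type": "application/json",
        "Authorization": "Bearer " + api_key,
    }


if __name__ == "__main__":
    # Print what would be sent; no network call is made here.
    print("POST", MCP_SERVER_URL)
    print(json.dumps(build_headers(API_KEY), indent=2))
    print(json.dumps(build_tools_list_request(), indent=2))
```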

Working with MCP tools

Once your MCP server URL has been integrated into your chosen client (e.g. Claude), the LLM gains access to the tools exposed by the server. However, certain tools will only be accessible if your project has the required configuration.

For example:

- No relations configured → no "get related products" tool

- No numeric filters visible → no dynamic filter parameters

- No sorting options configured → no sortBy parameter

- No zoeAppKey configured → no "ask assistant" tool
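
The rules above can be read as a simple mapping from project setup to exposed tools. The following sketch models that mapping; the ProjectSetup class and its field names are hypothetical, and it assumes the three remaining tools are always exposed, which this page implies but does not state outright.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ProjectSetup:
    """Hypothetical summary of what is configured for one MCP configuration."""
    has_relations: bool = False
    has_visible_numeric_filters: bool = False
    has_sorting_options: bool = False
    zoe_app_key: Optional[str] = None


def available_tools(setup: ProjectSetup) -> List[str]:
    """List the tool names the LLM would see for this setup."""
    # Assumed to be exposed regardless of configuration.
    tools = [
        "products_search_with_filters",
        "products_get_details",
        "products_get_details_by_name",
    ]
    if setup.has_relations:
        tools.append("products_get_related")
    if setup.zoe_app_key:
        tools.append("products_ask_assistant")
    return tools


def search_parameters(setup: ProjectSetup) -> Dict[str, bool]:
    """Report which optional search parameters would be offered."""
    return {
        "dynamic_filters": setup.has_visible_numeric_filters,
        "sortBy": setup.has_sorting_options,
    }
```

A checklist like this can help when a tool you expect does not show up in the client: compare the setup against each condition before looking elsewhere.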

Product search (products_search_with_filters)

The LLM can use this tool to search your catalog based on natural language input. When a user searches with free text (e.g. "gaming laptops under $1500"), the LLM can resolve the query and set the appropriate filters and sorting options to find matching products.

Filters and sorting options come directly from your project in Search Studio. Only configured filters marked as visible and configured sorting options will be used.
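
To make the resolution step concrete, here is a minimal sketch of the JSON-RPC 2.0 tools/call message an MCP client might send for this tool. The tools/call method and its name/arguments structure come from the MCP specification. The argument names query and price_max and the sort value price_asc are illustrative assumptions; only sortBy is named on this page, and the actual input schema is whatever the server reports for your project.

```python
import json


def build_search_call(query, filters=None, sort_by=None, request_id=2):
    """Build a JSON-RPC 2.0 tools/call message for products_search_with_filters.

    ASSUMPTION: "query" and the filter keys are illustrative names. Only
    sortBy is named on this page; the real schema comes from the server.
    """
    arguments = {"query": query}
    arguments.update(filters or {})
    if sort_by is not None:
        arguments["sortBy"] = sort_by
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "products_search_with_filters",
            "arguments": arguments,
        },
    }


if __name__ == "__main__":
    # "gaming laptops under $1500" resolved into a hypothetical price filter.
    call = build_search_call("gaming laptops", {"price_max": 1500}, "price_asc")
    print(json.dumps(call, indent=2))
```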

Product details (products_get_details)

This tool can be used by the LLM to retrieve more detailed product information when a user expresses interest in a specific product and provides the identifier (e.g. the SKU). It returns full product information:

- Name

- Images

- Categories

- All attributes and values

- Product links

Product details by name (products_get_details_by_name)

This tool is used when more information about a product is requested but the LLM has only been given a product name (i.e., no identifier has been provided in the session).
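
The choice between the two detail tools is made by the LLM, but the rule it follows can be sketched as below. The function name and the argument keys identifier and name are hypothetical and only mirror the description on this page.

```python
def pick_details_tool(identifier=None, name=None):
    """Return (tool_name, arguments) for a product-details request.

    ASSUMPTION: the argument keys "identifier" and "name" are illustrative.
    """
    # Prefer the identifier (e.g. the SKU) whenever the session has one.
    if identifier:
        return "products_get_details", {"identifier": identifier}
    # Fall back to the name-based lookup when only a name is known.
    if name:
        return "products_get_details_by_name", {"name": name}
    raise ValueError("Need a product identifier or a product name")
```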

Related products (products_get_related)

This tool is used to answer requests about product compatibility, suitable alternatives, matching accessories, and similar topics.

Relation types are sourced from your Relations setup in Data Platform. If no relations exist, this tool is not available.

The tool returns products grouped by relation tag and relation name.
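
As an illustration of what "grouped by relation tag and relation name" means, the sketch below groups a flat list of records by that pair. The record fields and sample values are invented for the example; the server's actual response format is not documented on this page and may differ.

```python
from collections import defaultdict

# Hypothetical flat records; the server's real response format may differ.
SAMPLE_RELATED = [
    {"tag": "accessory", "relation": "Fits with", "product": "Drill bit set"},
    {"tag": "accessory", "relation": "Fits with", "product": "Spare battery"},
    {"tag": "alternative", "relation": "Similar to", "product": "Compact drill"},
]


def group_by_relation(records):
    """Group product names by the (relation tag, relation name) pair."""
    grouped = defaultdict(list)
    for record in records:
        grouped[(record["tag"], record["relation"])].append(record["product"])
    return dict(grouped)


if __name__ == "__main__":
    for (tag, relation), products in group_by_relation(SAMPLE_RELATED).items():
        print(tag, "/", relation, ":", ", ".join(products))
```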

Assistant knowledge (products_ask_assistant)

This tool is used to answer conceptual or buying-advice questions.

Examples:

- "What's the difference between OLED and LED?"

- "Is this good for sensitive skin?"

- "Compare these two."

This tool is only available when your configuration includes a Zoe App Key.

Best practices

- If a tool is missing, verify that the corresponding filters, sorting options, or relations are configured in your project.

- Use meta-instructions in the client to inform the LLM how it should display information to end users (e.g. "never show price" or "only show ratings above 4.0").

- Test how the LLM resolves a variety of queries and adjust meta-instructions to guide the LLM if necessary.
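
One way to keep meta-instructions easy to adjust between test runs is to hold them as a list and assemble the instruction text from it. The helper below is a hypothetical sketch; how the resulting text is supplied (system prompt, project instructions, or similar) depends on your client.

```python
def build_meta_instructions(rules):
    """Join display rules into one instruction block for the LLM."""
    lines = ["When presenting products to end users, follow these rules:"]
    # Skip empty entries so a blank rule never becomes a bare bullet.
    lines.extend("- " + rule.strip() for rule in rules if rule.strip())
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_meta_instructions([
        "Never show price.",
        "Only show ratings above 4.0.",
    ]))
```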